‘All models are false; some models are useful.’*

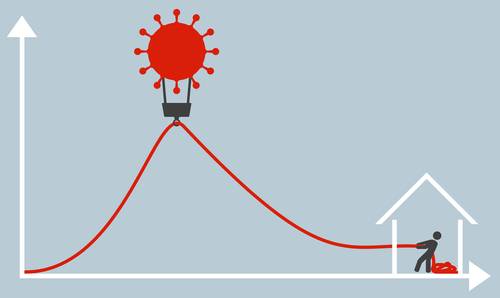

The COVID-19 pandemic has turned all of us into amateur epidemiologists. We obsessively follow the daily briefings of political leaders and public health experts, track the latest figures on hospital deaths and newly confirmed infections, and search desperately for any sign that the curve is flattening. In our darkest moments, we try to make sense of mortality projections for the coming months that vary widely and seem to change almost daily.

In all of this, it pays to reflect on what models like those used by epidemiologists can and cannot tell us. In banking and capital markets, we have enormous experience with models and have learned valuable lessons about effective modeling and model risk management. Those same lessons are familiar to our colleagues in epidemiology.

Models apply theory and expert judgment to process input data in order to produce quantitative estimates. All models are simplifications, and none can capture every aspect of reality; in this sense, all models are false. We use these simplifications to help us analyze difficult problems with complex interactions in a manageable way. What we expect from a model is not an invariant picture of a deterministic future: a model is not a crystal ball. Instead, a model is a tool for understanding how a system works and how its outcome might be affected by changes in assumptions and input data or by new interventions and controls.

In modeling the progress of COVID-19, epidemiologists face enormous challenges with respect to both assumptions and data. What value should be assumed for the attack rate (i.e., the percentage of an at-risk population, such as a household, that becomes infected when exposed to the disease)? What is the incubation period for the disease, and over what period are those who are infected contagious? How much more infectious are symptomatic individuals than asymptomatic individuals, and how variable is individual infectiousness? Are individuals who have recovered from infection free from re-infection in the short term? None of these variables is known with certainty, but small variations in any of them may result in large changes in projected mortality. A model cannot tell us for certain what will happen. But it can help us understand how the pieces of the puzzle fit together, so that we can check how robust our projections are to changed assumptions and refine our projections as we acquire new data.

One of the most critical factors in the spread of infectious disease is social interaction. Here, too, models are essential in assessing mitigation or suppression strategies that try to reduce mortality by reducing the reproduction number (i.e., the average number of secondary cases that each case generates). Models cannot tell us whether to adopt more aggressive social distancing measures or to close schools, but they can help us assess the impact of doing so.
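To make the role of the reproduction number concrete, here is a minimal sketch of a textbook SIR (susceptible, infectious, recovered) compartmental model. It is not the model used by any particular epidemiology group. The function name `simulate_sir` and every parameter value (population size, a five-day infectious period, the reproduction numbers tried) are illustrative assumptions, not estimates for COVID-19.

```python
def simulate_sir(r0, infectious_days=5.0, population=1_000_000,
                 initial_infected=10, days=365, steps_per_day=10):
    """Toy SIR model (forward Euler). Returns the fraction ever infected.

    All defaults are hypothetical values chosen for illustration only.
    """
    gamma = 1.0 / infectious_days      # recovery rate per day
    beta = r0 * gamma                  # transmission rate implied by r0
    dt = 1.0 / steps_per_day           # time step in days
    s = float(population - initial_infected)  # susceptible
    i = float(initial_infected)               # currently infectious
    for _ in range(days * steps_per_day):
        new_infections = beta * s * i / population * dt
        new_recoveries = gamma * i * dt
        s -= new_infections
        i += new_infections - new_recoveries
    return (population - s) / population


if __name__ == "__main__":
    # Sweep a few assumed reproduction numbers to see how sensitive
    # the projected share of the population infected is to this input.
    for r0 in (0.9, 1.5, 2.5):
        print(f"R0 = {r0}: fraction ever infected = {simulate_sir(r0):.3f}")
```

Even in this stripped-down setting, the projected share of the population infected depends heavily on the assumed reproduction number, which is why an intervention that lowers it can change the outcome so much. The sketch ignores incubation, asymptomatic transmission, and variation in individual infectiousness, all of which a serious model would need to address.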

The use of models inevitably gives rise to model risk. Flawed assumptions, faulty parameter estimates, inadequate data, and other sources of model risk will lead to errors. In capital markets, we have learned that controlling this risk requires careful attention to process. We think carefully about a model’s purpose and the problems for which it is suitable. We test and subject it to independent review at every stage of its development. We monitor and review it in use, comparing its projections to observed outcomes. As we obtain new data or acquire an improved understanding of the process that the model represents, we modify the model.

Our colleagues in epidemiology face the same problems and employ a similar process. Like us, they understand that a model is not an answer, but a tool for asking questions.

*Box, G. E. P., Hunter, J. S., and Hunter, W. G. Statistics for Experimenters: Design, Innovation and Discovery, 2nd Ed., Hoboken, NJ: Wiley, 2005, p. 440.
